June 25, 2018

Artificial Intelligence in the Spotlight for Mind Bytes 2018

“Artificial intelligence” is making a serious comeback. When AI mostly failed to live up to its 20th century hype, the term fell out of favor. But today, as scientists, businesses, and society start to grapple with the enormous potential of machine learning, data science, and other computational techniques, artificial intelligence is back in vogue.

At the 4th annual Mind Bytes symposium, hosted by the University of Chicago Research Computing Center (RCC) on May 8th, this modern vision of artificial intelligence gained definition. While a panel of UChicago faculty and industry researchers most directly addressed the event’s theme of “Solving Societal Challenges with Artificial Intelligence,” the day’s talks and posters all tied back to an expanded idea of AI: using computers to augment human thought and supercharge the scientific process.

By any name, that’s what the RCC has achieved for UChicago researchers since its founding in 2012. Currently, thousands of campus users, representing practically every department, division, and school, draw upon RCC resources, expertise, and training in their work, said RCC director H. Birali Runesha.

“We bring together researchers from every corner of the university who share an interest in high-performance computing and data to advance the state of the art of their field in new ways,” Runesha said. “These skills and perspectives are becoming essential today in conducting transformative research.”

Lightning talks from faculty who use RCC resources demonstrated the versatility of computational approaches, with presentations on climate and infectious disease modeling, financial market prediction, and the simulation of cellular motors and proteins. The posters on display covered even broader ground, ranging from cancer genetics, brain atlases, and Chinese text analysis to studies of teen pregnancy, voter turnout, and social networks.

But the rest of the day’s programming suggested that these early successes mark only the dawn of a new AI era. A keynote by Paul Blase of Speciate AI, a data analytics consulting firm, provided a flyover of how businesses increasingly use machine learning, graph analysis, and computer vision to cut operating costs, improve customer interactions, find new markets, and prevent fraud. But these new approaches will affect more than just ledger lines, and the audience discussion of Blase’s talk moved into weightier consideration of how increased automation will impact global unemployment and economic inequality.

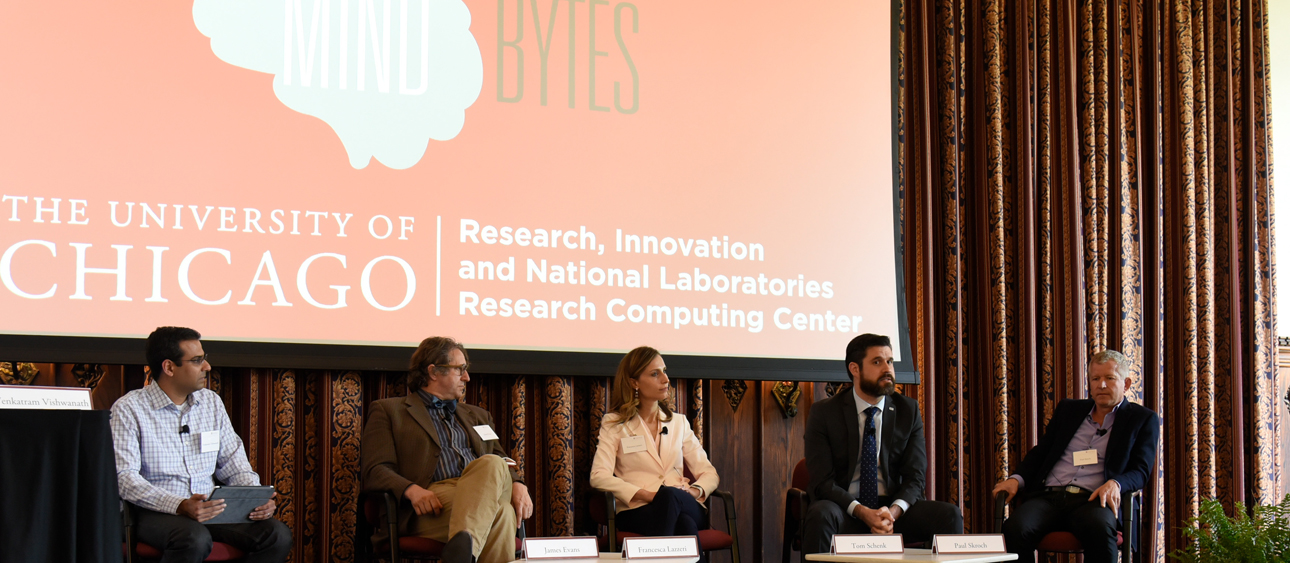

The double-edged sword of AI also hung over the Mind Bytes panel, which combined optimism about new data-driven solutions with warnings about the misuses and limitations of techniques we’re only just beginning to understand. With representation from academia (UChicago sociology professor James Evans, director of Knowledge Lab), industry (Microsoft’s Francesca Lazzeri and Paul Skroch of agricultural biotech firm Benson Hill) and government (City of Chicago Chief Data Officer Tom Schenk), the panel triangulated the current and future state of AI applications.

The panel’s conversation added further clarity to what artificial intelligence can help with in the near future. Evans argued that AI is most useful for “problems humans seem to be uniquely bad at,” such as identifying harmful drug interactions or the best combination of therapies to treat particular types of cancer. Other examples included signal detection in streaming data from sensor networks, real-time image processing to help people with visual disabilities navigate their surroundings, and the automatic generation of scientific hypotheses based on deep analysis of past literature.

Moderator Venkatram Vishwanath, a computer scientist at Argonne National Laboratory, also queried panelists on their biggest concerns around AI as its usage grows. A common refrain was the “black box” effect of many machine learning and data analytic techniques, which often make predictions without explaining causality. In order to convince skeptical stakeholders and build public trust and accountability, AI methods need to be more transparent about how they work, Schenk said.

“People need to understand what are the marginal effects of the different components for them to buy into it, because they need to develop that narrative,” Schenk said. “We also need to do constant experiments to understand what things are actually known to have causal impacts, instead of just looking at correlations, which might be spurious over a long time.”

Particularly when tackling social challenges, AI users must make sure that they’re using data truly representative of the real world and models free from hurtful biases, Lazzeri said. No matter how the “intelligence” portion of the term is described, the “artificial” half needs to be supplemented with established ethical principles such as fairness, inclusion, privacy, and transparency.

“You have to take what makes you human into equation, and work on problems that you can say actually generate social good,” said Skroch. “You have to bring your humanity to the table, it’s not going to come from the technology without you...what makes us human is what’s going to make this technology have social good.”